Dual Pixel RAW is Canon’s new invention that will see its first release with the EOS 5D Mark IV. There’s some vague marketing info floating around, but I haven’t seen a concise description of these files yet. So while updating the RAW decoder in Kuuvik Capture (website, my posts) to support the 5D Mark IV, I had a chance to dig deeper into Dual Pixel RAWs.

To understand the following discussion, you need to know how Canon’s Dual Pixel AF works, especially how these Dual Pixels are divided into two separate photodiodes. This article by Dave Etchells gives you a thorough explanation.

What is a Dual Pixel RAW file?

Normal CR2 files contain the following sections:

- Metadata

- Previews

- RAW data

The DPRAW file is a CR2 file that contains one additional section:

- Metadata

- Previews

- RAW data

- DPRAW data

This organization has a very important implication. Any RAW processing software that supports normal 5D Mark IV files will be able to open DPRAWs. If the app is unable to interpret the DPRAW data section, it will simply ignore it and work with the file as a normal RAW. There’s no risk or penalty in taking DPRAWs (besides the huge buffer drop from 21 to 7 frames).

The DPRAW file contains the normal RAW data section to make this compatibility possible, plus one side of each pixel in the DPRAW data section.

The RAW data section contains pixel values with the sum of left and right side photodiodes, while the DPRAW section contains pixel values from just one photodiode of the two.

But how do we get the other side of each pixel to let Dual Pixel aware processing apps do their tricks? It’s easy: since the RAW pixel value is the sum of left and right pixel sides, just subtract the DPRAW pixel value from the RAW pixel value.
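The subtraction can be sketched in a few lines of Python. This is only an illustration, not Canon’s or Kuuvik Capture’s code: the function name and the flat lists of already decoded pixel values are hypothetical, and clamping at zero is my own assumption to guard against noise pushing a difference below zero.

```python
def recover_other_side(raw_sum, dpraw_side):
    """Recover the photodiode values that are not stored in the file.

    raw_sum    -- decoded values from the RAW data section (left + right)
    dpraw_side -- decoded values from the DPRAW data section (one side only)
    """
    if len(raw_sum) != len(dpraw_side):
        raise ValueError("both sections must cover the same pixels")
    # other side = sum - stored side; clamping at 0 is an assumption
    return [max(total - side, 0) for total, side in zip(raw_sum, dpraw_side)]


# Made-up sample values, for illustration only
other_side = recover_other_side([1000, 2048, 512], [480, 1024, 300])
```

With these sample values the recovered side is 520, 1024 and 212.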

This is an unusually clever implementation from Canon, from whom I’m used to seeing all kinds of inflexible hacks that look as if they were designed in the 1980s.

Size-wise, DPRAW files are slightly less than double the size of normal RAWs (since metadata and preview images are stored only once).
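To see why the ratio stays just under two, here is a rough sketch with made-up section sizes. The numbers are hypothetical, and it assumes the DPRAW data section is about as large as the RAW data section, which I have not measured.

```python
def dpraw_size_ratio(metadata, previews, raw_data, dpraw_data=None):
    """Return DPRAW file size divided by normal RAW file size."""
    if dpraw_data is None:
        # Assumption: the DPRAW section is as large as the RAW section
        dpraw_data = raw_data
    normal = metadata + previews + raw_data   # normal CR2
    dual = normal + dpraw_data                # same file plus the DPRAW section
    return dual / normal


# Hypothetical sizes in megabytes, for illustration only
ratio = dpraw_size_ratio(metadata=1, previews=3, raw_data=36)
```

Because metadata and previews are counted only once, the ratio is always below 2 as long as they take up any space at all.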

How will Kuuvik Capture 2.5 handle DPRAWs?

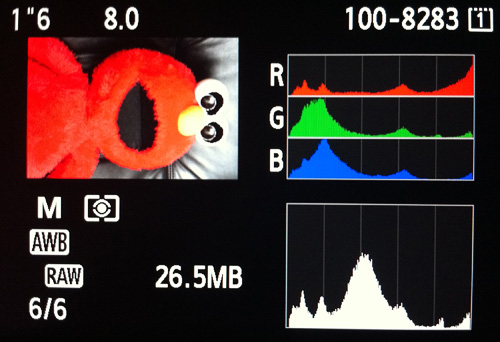

Not being a RAW converter, Kuuvik Capture needs the RAW data for two purposes: the RAW histogram as well as shadow/highlight warnings (the image displayed on the screen comes from the preview embedded in each CR2 file). For these the RAW data section is totally sufficient, and the app will ignore the DPRAW data section if present in a CR2 file.

The app will display normal RAW and DPRAW files equally fast, but downloading DPRAW files from the camera will take almost twice as long as downloading normal RAWs (because of their larger size).

I assume that there will be a possibility to switch the camera into DPRAW mode remotely (I can’t be sure until my rental unit arrives). If that is the case, then a new preference will let you specify whether you’d like to shoot RAWs or DPRAWs.

For the mathematically inclined, usable bit depth is calculated with the formula:
